The Emotional Economy

AI took care of it. And I felt nothing.

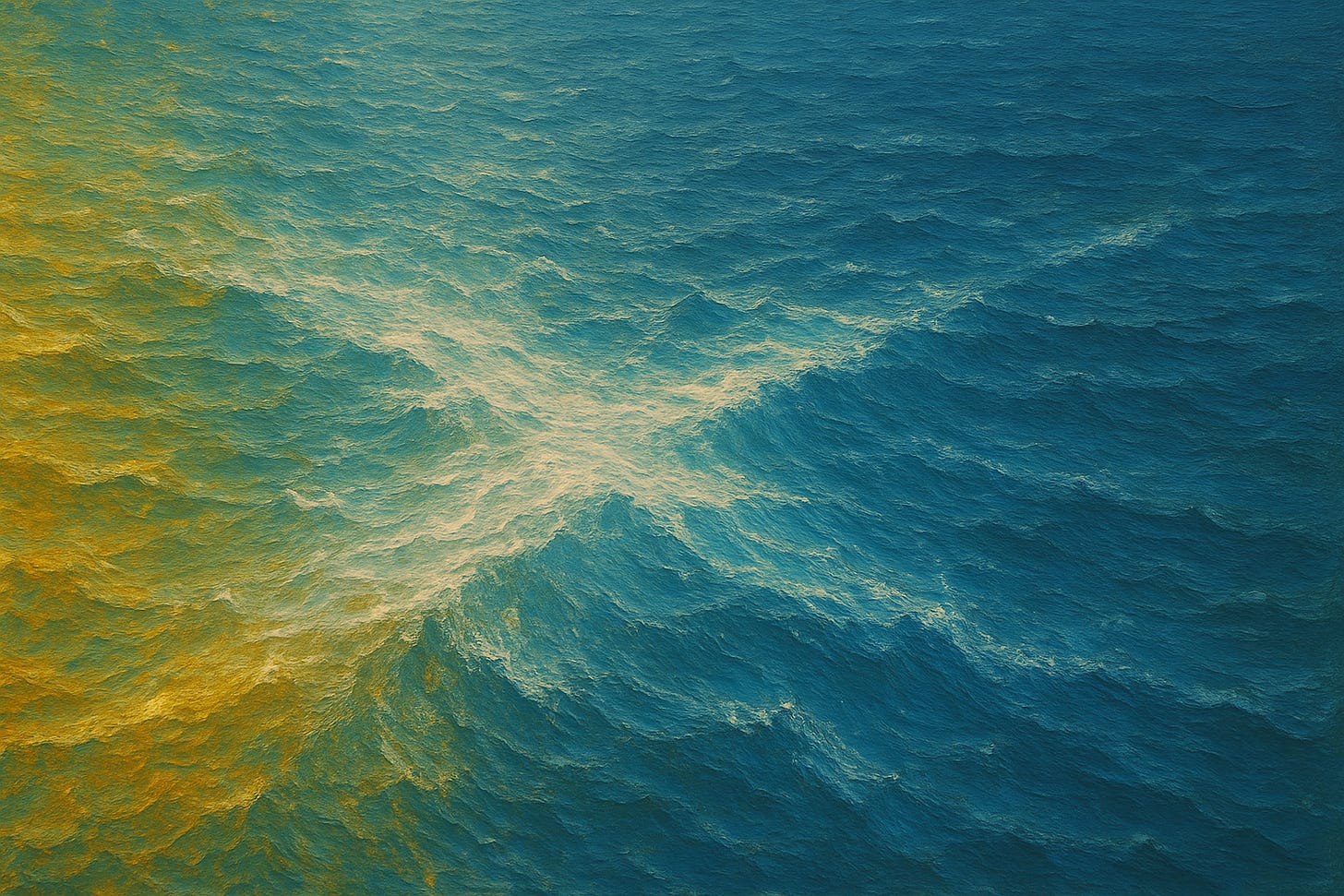

(Image generated by ChatGPT, prompt below.)

I opened my inbox this morning and saw the future staring back at me.

It was a short email thread—a simple back-and-forth between a client and someone on our team, just clarifying a few next steps in an onboarding process. But I never actually read the emails. I didn’t need to. At the top of the chain was a Gemini-generated summary that gave me everything I needed to know. It was clean. Efficient. Accurate. Impressive.

And—completely empty.

The summary nailed the what. It gave me the how. But it gave me none of the why. No emotion. No friction. No hint of whether the conversation was cordial or strained. Whether our team member was showing up in a way that lived our values. Whether the client felt seen.

It saved me time, yes. But it cost me presence.

That’s when the real thought landed: If AI keeps getting better and faster at summarizing the what and the how, what’s going to be left for us to do?

That’s not a rhetorical question. It’s the existential crossroads of our time.

We are standing on a binary edge—between outsourcing the soul of human work or reclaiming it. Between a world optimized for output and a world designed for meaning. Between the mechanical and the emotional.

I’ve started calling it the emotional economy.

It’s not a market of sentimentality or soft skills. It’s a deeper shift—a new economy based on the value of what only humans can feel, interpret, and convey. The little signals. The tone. The discomfort. The pause before the reply. The courage to ask a better question. The vulnerability to admit, “I don’t know.”

These things aren’t “intangibles.” They are the very things machines can’t replicate, not because machines aren’t smart enough, but because they don’t have a self. No ego. No shadow. No soul to grow.

The transition will be brutal. The dislocation will be widespread. What we’re seeing now—tools like Gemini, ChatGPT, Copilot—is just the appetizer.

Everyone is going to have to ask themselves:

What does it mean to be human now?

What do I offer that a machine can’t?

Not as an abstract philosophical exercise, but as a lived, professional necessity.

Terence McKenna once said that evolution doesn’t push us from behind; it pulls us forward. Toward greater complexity. Toward consciousness. Toward something we can’t yet imagine.

That idea has stayed with me for years. And while I can’t say for certain that’s where we’re headed, it feels true. And over the past 25 years, the most important transition I’ve made—one I’m still making—is learning to trust what I feel more than what I think.

It’s slow work. Messy work. But it’s human work.

And that, I believe, is the work we’re here to do.

Image Prompt: An abstract oil painting of a crossroads where golden and blue waves collide, symbolizing humanity at a turning point between emotional depth and mechanical efficiency, evoking the messy transition in the nature of work. The prompt seems quite empty, doesn’t it…? Unless you’re actually at the center, experiencing it.

Jonathan, you have exposed the very heart of what everyone is feeling. I had to buy a new garbage disposal this week. I chose Home Depot. No one there could tell me which one to order, so I researched it and found the correct one myself. It took my plumber and me over an hour to figure out which new disposal would perform like the previous one, which had broken. The comment we made to each other went something like, “We have to do the job of customer service to spend money at Home Depot.” Ughhhh.

Thank you, Jonathan, for an insightful post and for asking questions that shake us out of the comfort that AI automatically brings… It is easy, fast, and ready. But as you say, it is missing our assessment, our point of view, our essence. I see this “comfort” (I call it laziness in me) when AI is faster than I am at generating a description and narrative for a new painting I am uploading. I have made the mistake of using the proposed wording. It sounds neat, and mechanical. It sounds efficient, and not authentic. It is fast, and it fails to capture emotion and nuance. Like in your Accountability Dial, we need to find the sweet spot somewhere in the middle. We need to use AI as a tool, as scaffolding to capture the What, but the conversation within ourselves and with other people requires a thoughtful Why. The Why makes us unique, since our values, experiences, and behaviors are a blend that cannot be efficiently summarized. Thank you, @JonathanRaymond, for helping us pause and check in with our humanity.